Short Communication

Robots in Town – Low-Cost Automated Logistic Concept

Swaraj Tendulkar, Venkata Prashanth Uppalapati, Harshavardhan reddy Busireddy, Yekaterina Strigina, Tarun Parmar and Frank Schrödel*

Schmalkalden University of Applied Sciences, Germany

*Corresponding author: Frank Schrödel, Schmalkalden University of Applied Sciences, Germany.

Received Date: February 24, 2023; Published Date: March 06, 2023

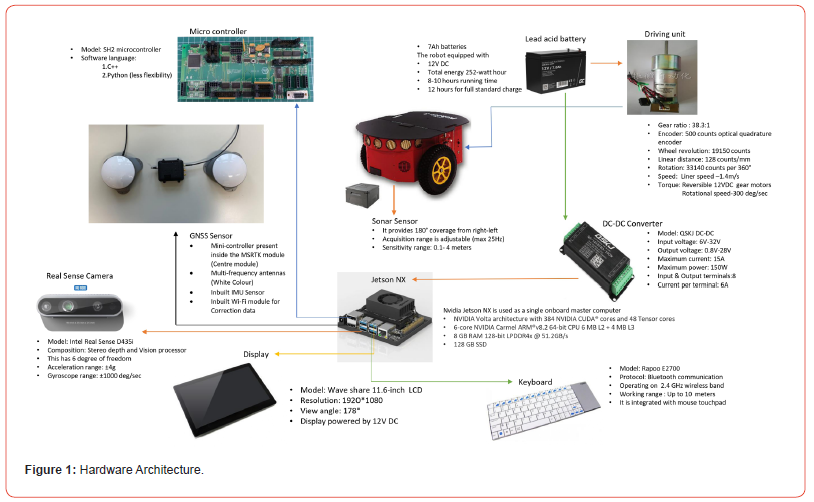

Technology is evolving constantly, pushing existing boundaries and opening new horizons. Robotics has long been regarded as a technology of the future, with applications ranging from production to the mobility sector, and autonomous mobility is widely viewed as a technology that can revolutionize both transport and logistics. With the aim of improving logistics, Schmalkalden University is developing a solution in which a low-cost mobile robot carries products from a supermarket to neighboring locations (see [1]). The robot under development is a Pioneer P3-DX, which steers by differential drive. Its components are shown in Figure 1. It houses an SH2 microcontroller unit, which serves as the low-level controller and receives inputs from the high-level controller, in our case an Nvidia Jetson Nano. The robot carries a variety of sensors: wheel encoders, an IMU, an Intel RealSense D435i depth camera, a 360° sonar and a GNSS sensor. The algorithms for sensor data exchange are implemented on the Jetson using ROS Noetic on Ubuntu 20.04.

For a robust application, sensor fusion is necessary to cover a variety of environment scenarios. The environment can be mapped through Real-Time Appearance-Based Mapping (RTAB-Map) using the Intel RealSense depth camera. A point cloud of the environment can be created, and the camera publishes a range of topics, including image data, IMU data, pose information and point cloud data. For localization in the outdoor environment, we employ a high-precision GNSS system from the company ANavS, which consists of a base station and a second unit mounted on the robot that provides the localization coordinates. These precise coordinates let us determine the robot's position along the path it takes through the environment, which can be used for further path planning and control. Following [2], we found a low-cost substitute for the laser scanner.
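To illustrate how GNSS coordinates can feed path planning, the following minimal sketch converts a latitude/longitude fix into east/north offsets in metres relative to a reference fix. It is not the ANavS interface or the implementation used on the robot: the function name `gnss_to_local_xy` and the flat-earth (equirectangular) approximation are assumptions chosen for brevity, and the approximation is only reasonable over short distances such as a neighborhood route.

```python
import math

EARTH_RADIUS_M = 6378137.0  # WGS-84 equatorial radius in metres


def gnss_to_local_xy(lat_deg, lon_deg, ref_lat_deg, ref_lon_deg):
    """Convert a GNSS fix to (east, north) offsets in metres.

    Hypothetical helper: uses a flat-earth approximation around the
    reference fix, so errors grow with distance from that reference.
    """
    d_lat = math.radians(lat_deg - ref_lat_deg)
    d_lon = math.radians(lon_deg - ref_lon_deg)
    # Longitude lines converge toward the poles, hence the cosine factor.
    east = EARTH_RADIUS_M * d_lon * math.cos(math.radians(ref_lat_deg))
    north = EARTH_RADIUS_M * d_lat
    return east, north
```

A planner working in a metric map frame could call this for every incoming fix, using for example the first fix of a run as the reference.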

Furthermore, we aim to apply Deep Learning to better understand the environment and use the camera to its full potential. For our use case, we need to detect certain objects, e.g., pedestrians, cars and pets, that will be part of the environment in which the robot drives. It is also important to measure the distance of these objects from the robot in order to derive the necessary control action. To address this, the YOLO algorithm is used to detect the objects, with object names taken from the COCO dataset. YOLO relies on the GPU, so for it to run smoothly we need a CUDA-capable GPU supported by TensorFlow. The scripting is done in Python. Moreover, the robot uses sonar data to determine occupied areas, plan a path around them and adapt the vehicle velocity accordingly. The camera image classification is also used to create situation-dependent tactical planning solutions, e.g., for crosswalks and zebra crossings (Figures 1 and 2).
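The step from a detected object to a control action can be sketched as follows. This is an illustrative example only, not the project's code: it assumes the detector returns a pixel bounding box and that an aligned depth image in metres is available. The function names, the use of the median depth, and all thresholds (`stop_dist`, `slow_dist`, `v_max`) are hypothetical.

```python
from statistics import median


def object_distance_m(depth_m, box):
    """Estimate the distance to a detected object from a depth image.

    depth_m: 2D list (rows of floats) with depth in metres, 0.0 = no reading.
    box: (x_min, y_min, x_max, y_max) pixel bounding box, max values exclusive.
    Returns the median valid depth inside the box, or None if none is valid.
    """
    x_min, y_min, x_max, y_max = box
    valid = [
        d
        for row in depth_m[y_min:y_max]
        for d in row[x_min:x_max]
        if d > 0.0
    ]
    return median(valid) if valid else None


def speed_limit_mps(distance_m, stop_dist=1.0, slow_dist=4.0, v_max=1.0):
    """Scale the allowed speed linearly between stop_dist and slow_dist.

    All thresholds are assumed values for illustration only.
    """
    if distance_m is None or distance_m >= slow_dist:
        return v_max
    if distance_m <= stop_dist:
        return 0.0
    return v_max * (distance_m - stop_dist) / (slow_dist - stop_dist)
```

The median is used here because a bounding box usually contains some background pixels, and the median is less sensitive to those outliers than the mean.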

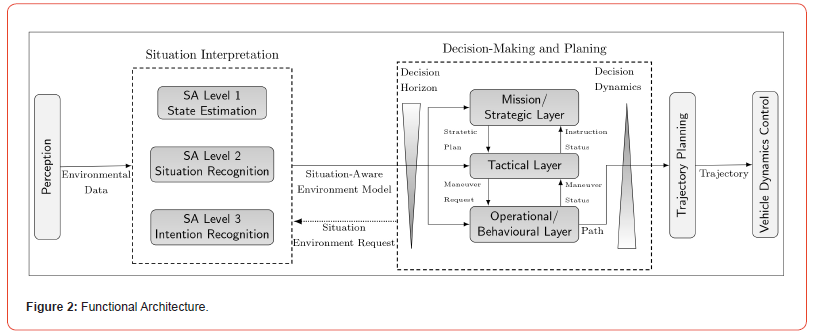

The project aims to handle specific scenarios and to apply tactical planning in such situations, as described by the functional architecture in Figure 2. As depicted in the flow chart, the camera sensor perceives the environmental data and the situation is interpreted on three levels. At the first level, the state of the robot as it approaches the situation is recognized. Furthermore, specific situations are pre-defined, e.g., the robot approaching a crosswalk, so that the robot can recognize the situation and follow the operation programmed for it. To deal with such a complex task, the Decision Planning Block contains the Tactical Layer, which takes input from the Strategic Layer to plan the maneuver and passes a maneuver request to the Operational Layer. The outputs of these functions are used to plan an optimal trajectory. For more detail, please refer to [3].
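One way to picture a pre-defined situation such as a crosswalk is as a small state machine in the tactical layer. The sketch below is an assumption made for illustration and does not reproduce the architecture in [3]: the maneuver names, the inputs `crosswalk_dist_m` and `road_clear`, and both distance thresholds are hypothetical.

```python
from enum import Enum

APPROACH_DIST_M = 5.0  # assumed distance at which the robot starts to slow down
STOP_DIST_M = 0.5      # assumed distance at which the robot must decide


class Maneuver(Enum):
    CRUISE = "cruise"
    APPROACH = "approach"
    WAIT = "wait"
    CROSS = "cross"


def next_maneuver(current, crosswalk_dist_m, road_clear):
    """Select the next maneuver to request from the operational layer.

    crosswalk_dist_m: distance to a detected crosswalk, or None if none is seen.
    road_clear: True if perception reports no crossing traffic.
    """
    if current is Maneuver.CRUISE:
        if crosswalk_dist_m is not None and crosswalk_dist_m <= APPROACH_DIST_M:
            return Maneuver.APPROACH
    elif current is Maneuver.APPROACH:
        if crosswalk_dist_m is None:
            return Maneuver.CRUISE  # detection lost, resume normal driving
        if crosswalk_dist_m <= STOP_DIST_M:
            return Maneuver.CROSS if road_clear else Maneuver.WAIT
    elif current is Maneuver.WAIT:
        if road_clear:
            return Maneuver.CROSS
    elif current is Maneuver.CROSS:
        if crosswalk_dist_m is None:
            return Maneuver.CRUISE  # crosswalk is behind the robot
    return current
```

Keeping the decision logic in one pure function makes each pre-defined situation easy to test in isolation before it is connected to the trajectory planner.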

The algorithms for the vehicle dynamics control are developed in MATLAB/Simulink. An important feature is that MATLAB can communicate with ROS. Hence, any data recorded by the sensors can be published in ROS and subscribed to by MATLAB/Simulink as input to the control algorithms. The algorithms developed in MATLAB/Simulink can then be tested in simulation with Gazebo: an environment model is created in Gazebo, and the robot model is driven through it according to the control algorithm. In our case, we use a classical Pure Pursuit controller because it is well suited to providing control actions for a differential drive. For the algorithm to work, we need a test map of the environment, which we create with the Intel camera by driving the robot through the environment. The resulting .pgm and .yaml map files are processed in MATLAB into a binary occupancy grid, which is given as input to the Simulink model for path planning and control. This map serves as a global map and does not respond to dynamic changes in the environment.
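The idea behind Pure Pursuit for a differential drive can be sketched in a few lines. The project implements its controller in MATLAB/Simulink; the Python version below is only a simplified illustration written in the project's scripting language. The function names, the waypoint-based lookahead selection, and the default lookahead distance and speed are assumptions, not values from the robot.

```python
import math


def pure_pursuit_cmd(pose, path, lookahead_m=0.5, v_mps=0.3):
    """Return (v, omega) steering a differential-drive robot along a path.

    pose: (x, y, heading_rad) in the map frame.
    path: list of (x, y) waypoints ordered from start to goal.
    """
    x, y, theta = pose
    # Start the search at the waypoint closest to the robot.
    nearest = min(
        range(len(path)),
        key=lambda i: math.hypot(path[i][0] - x, path[i][1] - y),
    )
    # Goal point: first waypoint from there on that is at least one
    # lookahead distance away; otherwise the final waypoint.
    goal = path[-1]
    for wx, wy in path[nearest:]:
        if math.hypot(wx - x, wy - y) >= lookahead_m:
            goal = (wx, wy)
            break
    dx, dy = goal[0] - x, goal[1] - y
    dist_sq = dx * dx + dy * dy
    if dist_sq == 0.0:
        return 0.0, 0.0  # robot is exactly on the final waypoint
    # Lateral offset of the goal point expressed in the robot frame.
    y_r = -math.sin(theta) * dx + math.cos(theta) * dy
    curvature = 2.0 * y_r / dist_sq  # arc through robot and goal point
    return v_mps, v_mps * curvature


def wheel_speeds(v_mps, omega_radps, track_m):
    """Convert (v, omega) into (left, right) wheel speeds in m/s."""
    half = omega_radps * track_m / 2.0
    return v_mps - half, v_mps + half
```

A positive angular velocity turns the robot to the left. The track width passed to `wheel_speeds` has to be measured on the actual platform.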

Ultimately, these separate modules need to work in tandem to provide an autonomous logistics solution. The scope of this project is immense considering the number of warehouses and supermarkets all over the world. A successful implementation of this technology can help humans and robots work closely together and reduce the human effort of carrying loads. When working with such sensors and algorithms, data management and processing is an important area that must be addressed. With large amounts of incoming data, we need to filter out which data is useful and should be processed further. Running the algorithms simultaneously requires high-performance CPUs and GPUs, which can be costly. The major challenge posed is finding robust solutions, as the environment is highly dynamic and subject to change. Ensuring that the robot can adapt to and handle these changes to fulfill its goal has to be tackled by intensive testing in different environments. Changing weather is a further challenge, and how to handle it remains a topic for future work.

To realize the goal of the project, an intermediate step is to consider human factors as a key enabler. As the robot will drive in the vicinity of humans, we need to take into account how well people accept the presence of the robot, as discussed for indoor robots in [4,5]. Along with the acceptance criteria, it is important to understand what kind of movement is well received by people walking near the robot. We can get a better idea of this through extensive measurement campaigns during trial runs in public outdoor test environments, resulting in comprehensive data sets (managed in MARVS). Recently, the team has been working on such measurement campaigns in the cities of Gera and Freiberg to gain a first understanding of the acceptance criteria in outdoor environments.

Acknowledgement

We gratefully acknowledge the financial support for part of this research by the Federal Ministry for Digital Affairs and Transport under the grant 19F1117A (mFUND funding program).

Conflict of Interest

No conflict of interest.

References

- Martin Plank, Clément LeGarde, Tom Assmann, Sebastian Zug (2022) Ready for robots? Assessment of autonomous delivery robot operative accessibility in German cities. Journal of Urban Mobility 2: 100036.

- Sebastian Zug, Felix Penzlin, André Dietrich, Tran Tuan Nguyen, Sven Albert, et al. (2012) Are laser scanners replaceable by Kinect sensors in robotic applications? 2012 IEEE International Symposium on Robotic and Sensors Environments Proceedings. IEEE.

- R Voßwinkel, M Gerwien, A Jungmann, F Schrödel (2021) Intelligent Decision Making and Motion Planning for Automated Vehicles in Urban Environments. In: Autonomous Driving and Advanced Driver-Assistance Systems (ADAS): Applications, Development, Legal Issues, and Testing, Taylor & Francis Group / CRC Press.

- Siebert FW, Pickl J, Klein J, Rötting M, Roesler E (2020) Let’s Not Get Too Personal – Distance Regulation for Follow Me Robots. In: International Conference on Human-Computer Interaction, Springer, Cham, pp. 459-467.

- Siebert FW, Klein J, Rötting M, Roesler E (2020) The influence of distance and lateral offset of follow me robots on user perception. Frontiers in Robotics and AI 7: 74.